Projects:

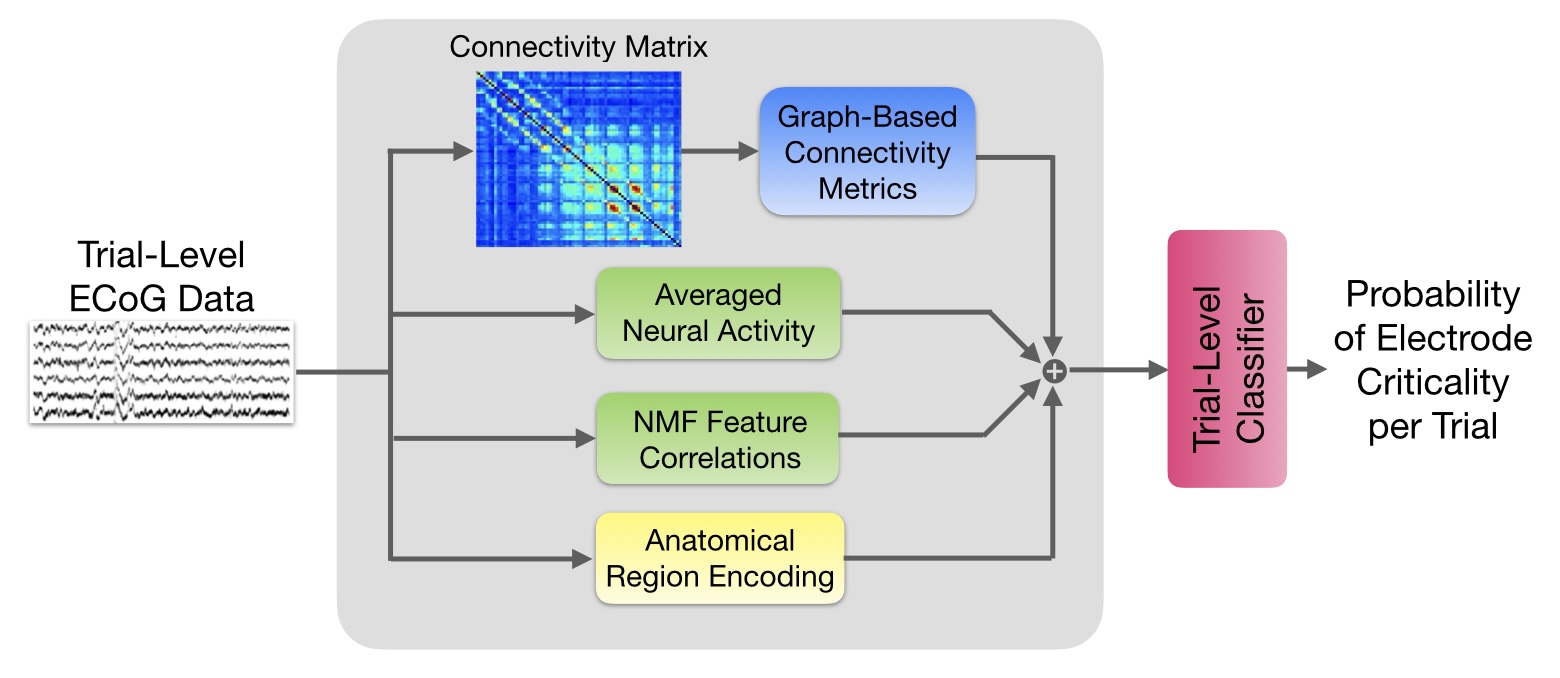

Graph-Based Machine Learning for Speech-Critical Brain Mapping

- Built models that predict speech-critical cortical regions from ECoG data using two approaches: a machine learning pipeline with anatomical and graph-based features, and a graph neural network (GNN) trained directly on connectivity graphs. Achieved an AUC-ROC of up to 0.87 across subjects, with feature-level interpretability.
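As a rough illustration of the graph-feature idea, the sketch below computes one simple graph feature (node strength) from a connectivity matrix and scores it with AUC-ROC. The matrix, the labels, and the function names `node_strength` and `auc_roc` are hypothetical toy values chosen for this example; they are not the project's data or pipeline.

```python
import numpy as np

def node_strength(adjacency: np.ndarray) -> np.ndarray:
    """Weighted degree (strength) of each node: the sum of its edge weights."""
    return adjacency.sum(axis=1)

def auc_roc(labels: np.ndarray, scores: np.ndarray) -> float:
    """AUC-ROC as the probability that a random positive outranks a
    random negative, with ties counted as one half."""
    pos = scores[labels == 1]
    neg = scores[labels == 0]
    # Compare every positive score with every negative score.
    diff = pos[:, None] - neg[None, :]
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / (len(pos) * len(neg)))

# Toy symmetric connectivity matrix over four hypothetical electrodes.
adjacency = np.array([
    [0.0, 0.9, 0.8, 0.1],
    [0.9, 0.0, 0.7, 0.2],
    [0.8, 0.7, 0.0, 0.1],
    [0.1, 0.2, 0.1, 0.0],
])
labels = np.array([1, 1, 0, 0])  # hypothetical "speech-critical" labels

strength = node_strength(adjacency)
score = auc_roc(labels, strength)
```

A real pipeline would combine many such features with anatomical ones and evaluate on held-out subjects; this only shows how a single graph feature can be scored.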

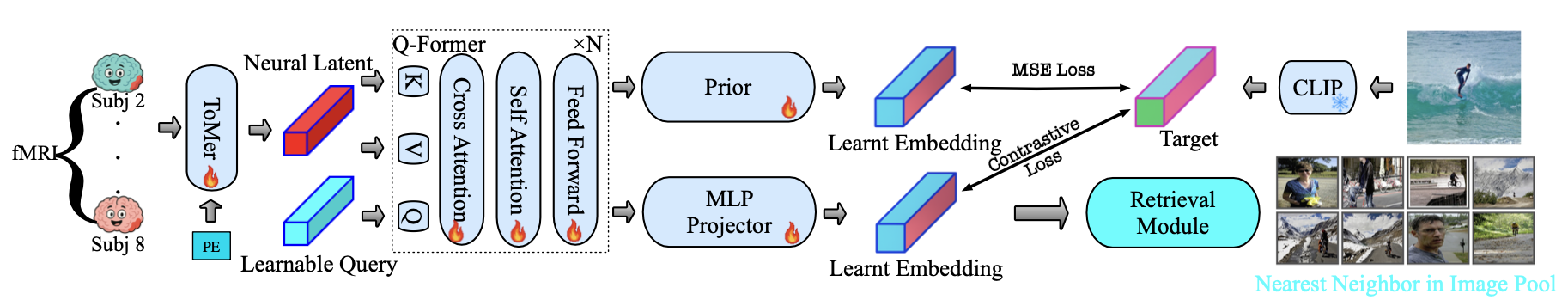

Transformer Models with Token Merging for fMRI Decoding

Figure credit: Chenqian Le

- Contributed to the development of a transformer-based framework with token merging and query-driven alignment for efficient multi-subject fMRI decoding. Achieved competitive retrieval performance with a 12–24× reduction in parameters compared to prior work.
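For readers unfamiliar with token merging, here is a minimal sketch of one common variant: a simplified bipartite matching that averages the most similar token pairs. This is an assumption made for illustration and may differ from the merging scheme used in the framework; the function name `merge_tokens` and the parameter `r` are hypothetical.

```python
import numpy as np

def merge_tokens(tokens: np.ndarray, r: int) -> np.ndarray:
    """Merge r tokens from set A into their most similar partners in set B.

    Tokens are split alternately into sets A and B. Each merged B token
    becomes the mean of itself and the A tokens assigned to it. Assumes
    non-zero float tokens and r <= len(A). Token order is not preserved.
    """
    a, b = tokens[0::2], tokens[1::2]
    a_n = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_n = b / np.linalg.norm(b, axis=1, keepdims=True)
    sim = a_n @ b_n.T                       # cosine similarity, shape (|A|, |B|)
    best_b = sim.argmax(axis=1)             # best partner in B for each A token
    best_sim = sim.max(axis=1)
    merged_a = np.argsort(-best_sim)[:r]    # the r A tokens with the closest match
    kept_a = np.setdiff1d(np.arange(len(a)), merged_a)

    sums = b.astype(float)                  # astype returns a copy
    counts = np.ones(len(b))
    for i in merged_a:
        sums[best_b[i]] += a[i]
        counts[best_b[i]] += 1
    merged_b = sums / counts[:, None]
    return np.concatenate([a[kept_a], merged_b], axis=0)

tokens = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
merged = merge_tokens(tokens, r=2)          # 4 tokens reduced to 2
```

Each call shortens the sequence by `r` tokens, which is where the savings in downstream computation come from.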

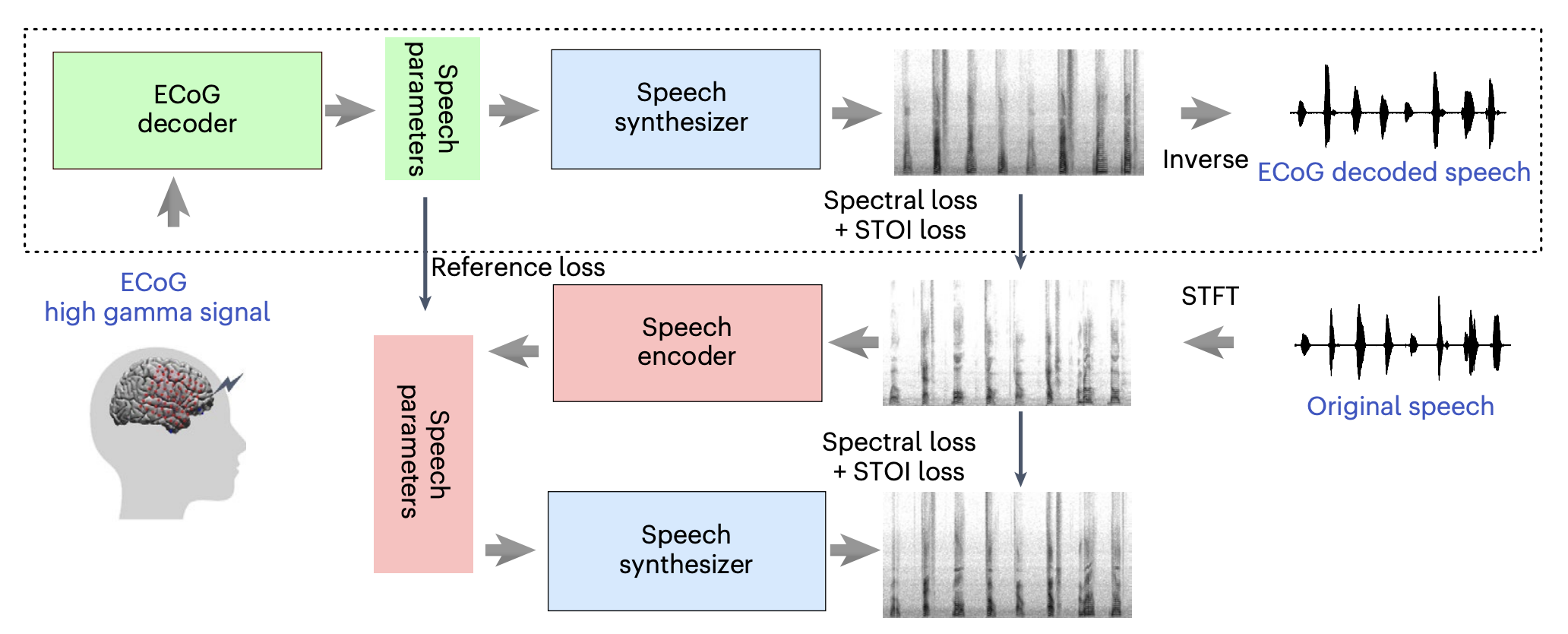

Neural Speech Decoding

Figure credit: Xupeng Chen

- Implemented a vector quantization module for an existing speech decoder framework that maps input spectrograms to speech parameters, including pitch frequency, formant filter center frequencies, and broadband unvoiced filter frequency. Implemented VQ-VAE and VQ-VAE-2 models and tuned them for performance on our dataset.

- Developed a phoneme classifier that takes spectrograms as input, so that a phoneme classification loss could be integrated into an existing speech generator model. Evaluated several architectures, including MLP, simple RNN, GRU, and LSTM, for classifying phonemes from spectrogram features.
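The core step of a vector quantization module can be sketched as a nearest-neighbor lookup into a codebook. The example below is a minimal NumPy illustration with a toy codebook; the function name `vector_quantize` and all values are hypothetical, and it leaves out the training-time pieces of a VQ-VAE (codebook and commitment losses, straight-through gradient).

```python
import numpy as np

def vector_quantize(latents: np.ndarray, codebook: np.ndarray):
    """Replace each latent vector with its nearest codebook entry
    (squared Euclidean distance). Returns (quantized, indices)."""
    # distances[i, j] = ||latents[i] - codebook[j]||^2
    diffs = latents[:, None, :] - codebook[None, :, :]
    distances = (diffs ** 2).sum(axis=-1)
    indices = distances.argmin(axis=1)
    return codebook[indices], indices

# Toy codebook with three 2-D entries and three encoder outputs.
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]])
latents = np.array([[0.1, -0.2], [0.9, 1.2], [-0.8, 0.7]])

quantized, indices = vector_quantize(latents, codebook)
```

Here each latent lands on a different codebook entry, so `indices` is the discrete code sequence a decoder would consume.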

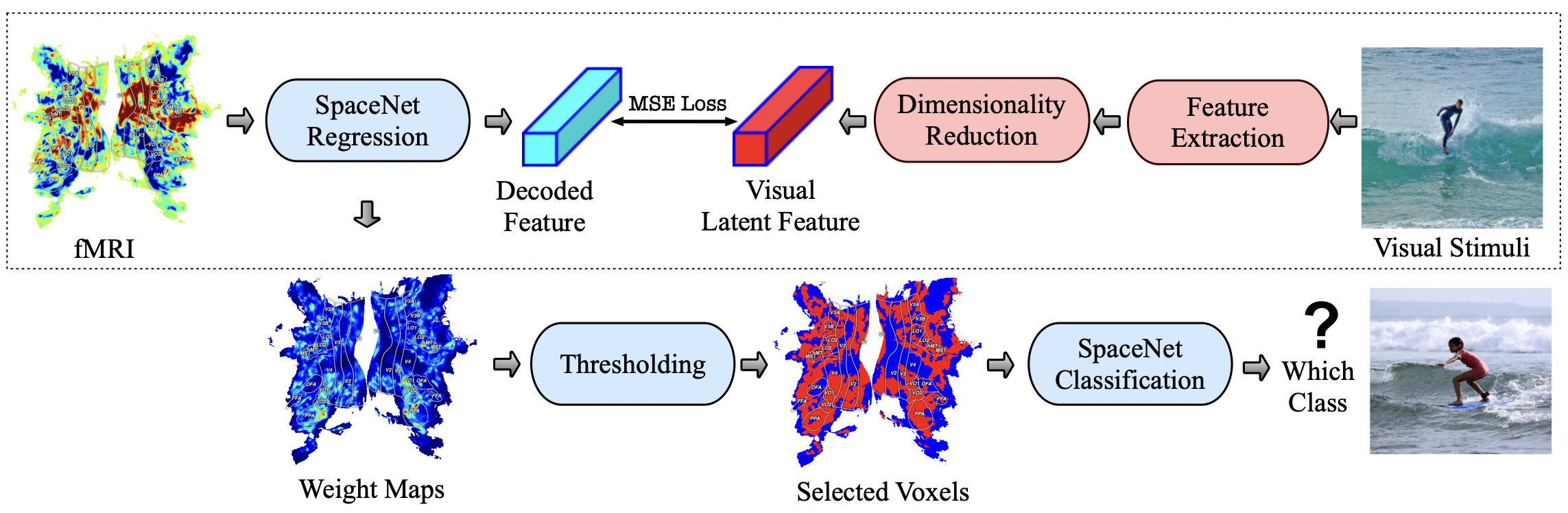

Deep Learning-Based Brain Decoding

- Developed a brain decoding method using visual features from deep neural networks and the Natural Scenes Dataset. Features were extracted with ResNet-50 and DINOv2, and dimensionality was reduced using PCA and UMAP. Nilearn’s SpaceNet Decoder with Graph-Net regularization was employed to generate classification and regression weight maps from fMRI data. Find our paper here.
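The PCA step can be sketched in a few lines of NumPy. This is a minimal illustration using random numbers as a stand-in for network features; the function name `pca_reduce`, the array sizes, and the number of components are assumptions, not the settings used in the project.

```python
import numpy as np

def pca_reduce(features: np.ndarray, n_components: int) -> np.ndarray:
    """Project features onto their top principal components via SVD."""
    centered = features - features.mean(axis=0)
    # Rows of vt are principal axes, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

rng = np.random.default_rng(0)
features = rng.normal(size=(20, 8))   # stand-in for 20 images x 8 features
reduced = pca_reduce(features, n_components=3)
```

The reduced features would then serve as decoding targets; UMAP and the SpaceNet decoder are separate steps not shown here.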

Handwritten Word Synthesis with GANs

- Generated a dataset of handwritten Persian words by applying Generative Adversarial Networks (GANs) to typed Persian words, which were first extracted from typed documents using a YOLOv5 model.